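Josh Bersin's article, "AI Implementations Increasingly Look Like Traditional IT Projects," presents five main findings:

- Data management: stresses the importance of data quality, governance, and architecture in AI projects, much as in IT projects.

- Security and access management: highlights the importance of strong security measures and access controls in AI implementations.

- Engineering and monitoring: discusses the need for ongoing engineering support and monitoring, similar to IT infrastructure management.

- Vendor management: points out the importance of thorough vendor evaluation and selection in AI projects.

- Change management and training: stresses the need for effective change management and training, which is critical to both AI and IT projects.

The original text follows; let's take a look: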

As we learn more and more about corporate implementations of AI, I’m struck by how they feel more like traditional IT projects every day.

Yes, Generative AI systems have many special characteristics: they’re intelligent, we need to train them, and they have a radical and transformational impact on users. And the back-end processing is expensive.

But despite the talk about advanced models and life-like behavior, these projects have traditional aspects. I’ve talked with more than a dozen large companies about their various AI strategies and I want to encourage buyers to think about the basics.

Finding 1: Corporate AI projects are all about the data.

Unlike the implementation of a new ERP system, payroll system, recruiting, or learning platform, an AI platform is completely data dependent. Regardless of the product you’re buying (an intelligent agent like Galileo™, an intelligent recruiting system like Eightfold, or an AI-enabling platform to provide sales productivity), success depends on your data strategy. If your enterprise data is a mess, the AI won’t suddenly make sense of it.

This week I read a story about Microsoft’s Copilot promoting election lies and conspiracy theories. While I can’t tell how widespread this may be, it simply points out that “you own the data quality, training, and data security” of your AI systems.

Walmart’s My Assistant AI for employees already proved itself to be 2-3x more accurate at handling employee inquiries about benefits, for example. But in order to do this the company took advantage of an amazing IT architecture that brings all employee information into a single profile, a mobile experience with years of development, and a strong architecture for global security.

One of our clients, a large defense contractor, is exploring the use of AI to revolutionize its massive knowledge management environment. While we know that Gen AI can add tremendous value here, the big question is “what data should we load” and how do we segment the data so the right people access the right information? They’re now working on that project.

During our design of Galileo we spent almost a year combing through the information we’ve amassed for 25 years to build a corpus that delivers meaningful answers. Luckily we had been focused on data management from the beginning, but if we didn’t have a solid data architecture (with consistent metadata and information types), the project would have been difficult.

So core to these projects is a data management team that understands data sources, metadata, and data integration tools. And once the new AI system is working, we have to train it, update it, and remove bias and errors on a regular basis.

Finding 2: Corporate AI projects need heavy focus on security and access management.

Let’s suppose you find a tool, platform, or application that delivers a groundbreaking solution to your employees. It could be a sales automation system, an AI-powered recruiting system, or an AI application to help call center agents handle problems.

Who gets access to what? How do you “layer” the corpus to make sure the right people see what they need? This kind of exercise is the same thing we did at IBM in the 1980s, when we implemented this complex but critically important system called RACF. I hate to promote my age, but RACF designers thought through these issues of data security and access management many years ago.

AI systems need a similar set of tools, and since the LLM has a tendency to “consolidate and aggregate” everything into the model, we may need multiple models for different users.
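One way to picture the “layering” question is a filter that runs before any text reaches the model. The sketch below is illustrative only: the `Document` class, the group names, and the `visible_documents` function are hypothetical and do not describe RACF or any vendor’s product.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    """A corpus entry tagged with the groups allowed to read it (hypothetical schema)."""
    doc_id: str
    text: str
    allowed_groups: frozenset = field(default_factory=frozenset)


def visible_documents(corpus, user_groups):
    """Return only the documents that share at least one group with the user."""
    user_groups = frozenset(user_groups)
    return [doc for doc in corpus if doc.allowed_groups & user_groups]


corpus = [
    Document("benefits-faq", "How to enroll in benefits...", frozenset({"all-employees"})),
    Document("comp-bands", "Salary bands by level...", frozenset({"hr-compensation"})),
]

# Only documents that pass the filter would be handed to the model as context.
employee_view = visible_documents(corpus, {"all-employees"})
hr_view = visible_documents(corpus, {"all-employees", "hr-compensation"})
```

Filtering at retrieval time keeps a single corpus while still showing different users different slices of it. Where the model itself is trained on restricted material, a filter like this is not enough, which is why separate models may be needed.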

In the case of HR, if we build a talent intelligence database using Eightfold, Seekout, or Gloat which includes job titles, skills, levels, and details about credentials and job history, and then we decide to add “salary” … oops … well, all of a sudden we have a data privacy problem.

I just finished an in-depth discussion with SAP-SuccessFactors going through the AI architecture, and what you see is a set of “mini AI apps” developed to operate in Joule (SAP’s copilot) for various use cases. SAP has spent years building workflows, access patterns, and various levels of user security. They designed the system to handle confidential data securely.

Remember also that tools like ChatGPT, which access the internet, can possibly import or leak data in a harmful way. And users may accidentally use the Gen AI tools to create unacceptable content, dangerous communications, and invoke other “jailbreak” behaviors.

In your talent intelligence strategy, how will you manage payroll data and other private information? If the LLM uses this data for analysis we have to make sure that only appropriate users can see it.
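The salary example can be made concrete with a field-level check applied before a profile is passed to the model or shown to a user. This is a minimal sketch under stated assumptions: the field names, role names, and the `redact_profile` helper are all hypothetical.

```python
SENSITIVE_FIELDS = {"salary"}  # hypothetical list of restricted fields


def redact_profile(profile, viewer_roles,
                   privileged_roles=frozenset({"compensation-analyst"})):
    """Return a copy of the profile, dropping sensitive fields unless the viewer is privileged."""
    if privileged_roles & set(viewer_roles):
        return dict(profile)
    return {key: value for key, value in profile.items()
            if key not in SENSITIVE_FIELDS}


profile = {"job_title": "Engineer", "skills": ["Python"], "level": 4, "salary": 150000}

manager_view = redact_profile(profile, {"hiring-manager"})
analyst_view = redact_profile(profile, {"compensation-analyst"})
```

The function returns a copy in both cases, so the stored profile is never modified by a view.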

Finding 3: Corporate AI projects need focus on “prompt engineering” and system monitoring.

In a typical IT project we spend a lot of time on the user experience. We design portals, screens, mobile apps, and experiences with the help of UI designers, artists, and craftsmen. But in Gen AI systems we want the user to “tell us what they’re looking for.” How do we train or support the user in prompting the system well?

If you’ve ever tried to use a support chatbot from a company like PayPal you know how difficult this can be. I spent weeks trying to get PayPal’s bot to tell me how to shut down my account, but it never came close to giving me the right answer. (Eventually I figured it out, even though I still get invoices from a contractor who has since passed away!)

We have to think about these issues. In our case, we’ve built a “prompt library” and series of workflows to help HR professionals get the most out of Galileo to make the system easy to use. And vendors like Paradox, Visier (Vee), and SAP are building sophisticated workflows that let users ask a simple question (“what candidates are at stage 3 of the pipeline”) and get a well formatted answer.
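A prompt library can be as simple as a set of named templates with slots the user fills in. The sketch below uses Python’s standard `string.Template`; the template names, wording, and the `build_prompt` helper are hypothetical and are not taken from Galileo or any vendor’s system.

```python
from string import Template

# Hypothetical library: each entry is a vetted prompt with named placeholders.
PROMPT_LIBRARY = {
    "pipeline_stage": Template(
        "List the candidates currently at stage $stage of the pipeline "
        "for the $role requisition."
    ),
    "policy_summary": Template(
        "Summarize our $policy policy in plain language for $audience."
    ),
}


def build_prompt(name, **params):
    """Fill in a library prompt; raises KeyError if the name or a placeholder is missing."""
    return PROMPT_LIBRARY[name].substitute(**params)


prompt = build_prompt("pipeline_stage", stage=3, role="Data Analyst")
```

Using `substitute` rather than `safe_substitute` means an incomplete prompt fails loudly instead of reaching the model with a placeholder left in it.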

If you ask a recruiting bot something like “who are the top candidates for this position” and plug it into the ATS, will it give you a good answer? I’m not sure, to be honest – so the vendors (or you) have to train it and build workflows to predict what users will ask.

This means we’ll be monitoring these systems, looking at interactions that don’t work, and constantly tuning them to get better.

A few years ago I interviewed the VP of Digital Transformation at DBS (formerly the Development Bank of Singapore), one of the most sophisticated digital banks in the world. He told me they built an entire team to watch every click on the website so they could constantly move buttons, simplify interfaces, and make information easier to find. We’re going to need to do the same thing with AI, since we can’t really predict what questions people will ask.
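Monitoring of this kind can start with a simple log of interactions and a report of which kinds of questions fail most often. The sketch below assumes each logged interaction already carries an `intent` label and a `resolved` flag; both fields and the `failure_report` function are hypothetical.

```python
from collections import Counter


def failure_report(interactions, min_count=2):
    """Return (intent, failure_rate) pairs for intents with unresolved questions, worst first."""
    totals, failures = Counter(), Counter()
    for item in interactions:
        totals[item["intent"]] += 1
        if not item["resolved"]:
            failures[item["intent"]] += 1
    report = [
        (intent, failures[intent] / totals[intent])
        for intent in totals
        # Skip intents seen too rarely to judge, and intents with no failures.
        if totals[intent] >= min_count and failures[intent]
    ]
    return sorted(report, key=lambda pair: pair[1], reverse=True)


log = [
    {"intent": "close_account", "resolved": False},
    {"intent": "close_account", "resolved": False},
    {"intent": "benefits", "resolved": True},
    {"intent": "benefits", "resolved": False},
    {"intent": "pto", "resolved": True},
]

report = failure_report(log)
```

The intents at the top of the report are the ones where a new workflow or a library prompt is most likely to help.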

Finding 4: Vendors will need to be vetted.

The next “traditional IT” topic is going to be the vetting of vendors. If I were a large bank or insurance company and I was looking at advanced AI systems, I would scrutinize the vendor’s reputation and experience in detail. Just because a firm like OpenAI has built a great LLM doesn’t mean that they, as a vendor, are capable of meeting your needs.

Does the vendor have the resources, expertise, and enterprise feature set you require? I recently talked with a large enterprise in the Middle East that has major facilities in Saudi Arabia, Dubai, and other locations in the region. They do not and will not let user information, queries, or generated data leave their jurisdiction. Does the vendor you select have the ability to handle this requirement? Small AI vendors will struggle with these issues, leading IT to do risk assessment in a new way.

There are also consultants popping up who specialize in “bias detection” or testing of AI systems. Large companies can do this themselves, but I expect that over time there will be consulting firms who help you evaluate the accuracy and quality of these systems. If the system is trained on your data, how well have you tested it? In many cases the vendor-provided AI uses data from the outside world: what data is it using and how safe is it for your application?

Finding 5: Change management, training, and organization design are critical.

Finally, as with all technology projects, we have to think about change management and communication. What is this system designed to do? How will it impact your job? What should you do if the answers are not clear or correct? All these issues are important.

There’s a need for user training. Our experience shows that users adopt these systems quickly, but they may not understand how to ask a question or how to interpret an answer. You may need to create prompt libraries (as we did for Galileo), or interactive conversation journeys. And then offer support so users can resolve answers which are wrong, unclear, or inconsistent.

And most importantly of all, there’s the issue of roles and org design. Suppose we offer an intelligent system to let sales people quickly find answers to product questions, pricing, and customer history. What is the new role of sales ops? Do we have staff to update and maintain the quality of the data? Should we reorganize our sales team as a result?

We’ve already discovered that Galileo really breaks down barriers within HR, for example, showing business partners or HR leaders how to handle issues that may be in another person’s domain. These are wonderful outcomes which should encourage leaders to rethink how the roles are defined.

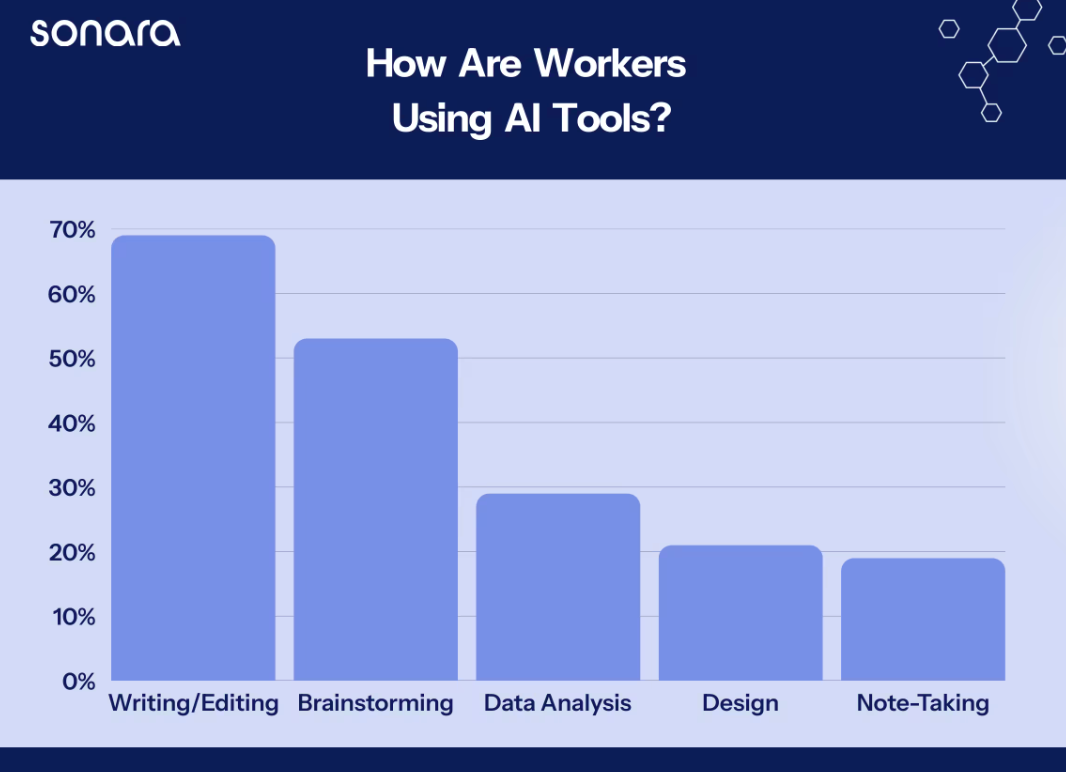

In our company, as we use AI for our research, I see our research team operating at a higher level. People are sharing information, analyzing cross-domain information more quickly, and taking advantage of interviews and external data at high speed. They’re writing articles more quickly and can now translate material into multiple languages.

Our member support and advisory team, who often rely on analysts for expertise, are quickly becoming consultants. And as we release Galileo to clients, the level of questions and inquiries will become more sophisticated.

This process will happen in every sales organization, customer service organization, engineering team, finance, and HR team. Imagine the “new questions” people will ask.

Bottom Line: Corporate AI Systems Become IT Projects

At the end of the day the AI technology revolution will require lots of traditional IT practices. While AI applications are groundbreaking and powerful, the implementation issues are more traditional than you think.

I will never forget the failed implementation of Siebel during my days at Sybase. The company was enamored with the platform, bought it, and forced us to use it. Yet the company never told us why they bought it, explained how to use it, or built workflows and job roles to embed it into the company. In only a year Sybase dumped the system after the sales organization simply rejected it. Nobody wants an outcome like that with something as important as AI.

As you learn more about AI and become more enamored with its power, I encourage you to think about the other tech projects you’ve worked on. It’s time to move beyond the hype and excitement and think about real-world success.